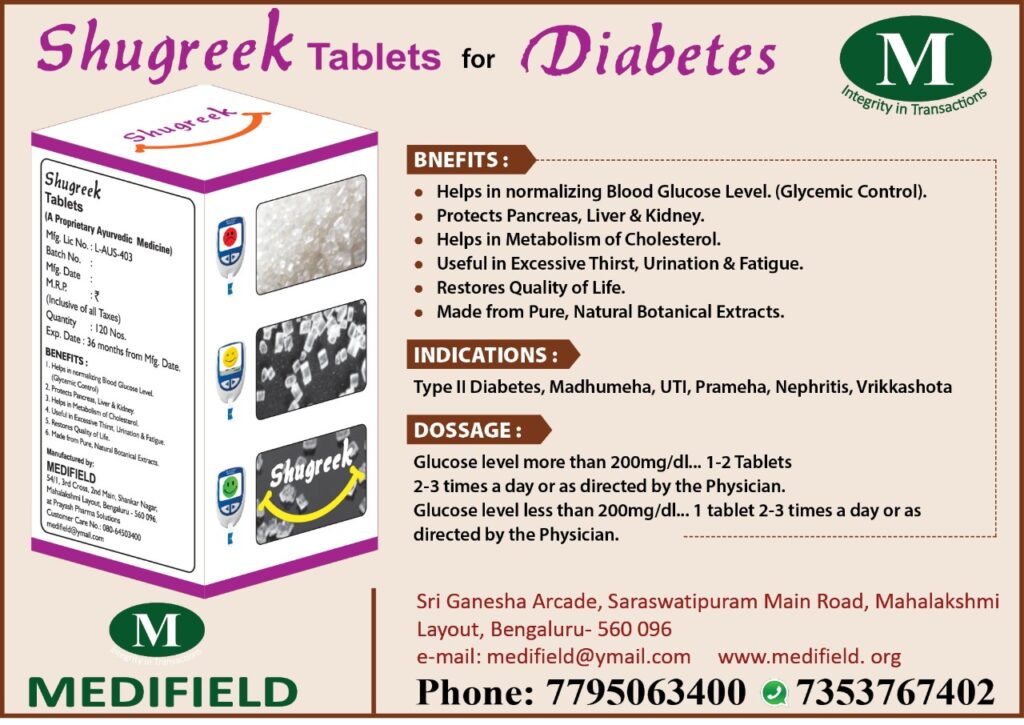

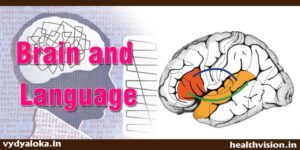

Brain and language make for both a particularly important and a particularly difficult topic in neuroscience. Modern empirical work has demonstrated that language is integrated with, and in constant interplay with, an incredibly broad range of neural processes.

INTRODUCTION:

Languages, exquisitely structured, complex, and diverse, are a distinctively human gift at the very heart of what it means to be human. As such, language makes for both a particularly important and a particularly difficult topic in neuroscience. A dominant early approach to the study of language was to treat it as a separate module or organ within the brain. However, much modern empirical work has demonstrated that language is integrated with, and in constant interplay with, an incredibly broad range of neural processes.

Neuroimaging and developmental studies, including studies of language in the aging brain, offer converging evidence of a highly interactive relationship between linguistic functions and other cognitive functions. A clear and comprehensive model explaining the functional neuroanatomy of language in the neurologically intact brain is still a work in progress. The newest attempts to propose such models represent a consistent shift towards accounts with increasing empirical and conceptual resolution that aim to capture the dynamic nature of the biological foundations of language. Better empirical resolution is now being achieved through the greater detail with which the temporal and spatial features of language-related brain activation patterns can be examined. Greater conceptual resolution refers to the increasing specificity with which the representations and operations underlying different language functions can be described.

Unlike other areas of neuroscience investigation (e.g., vision, motor action), which have relied heavily on invasive techniques in animal models, the study of language has no such animal model to draw on. Furthermore, in language the relationship between the form of a signal and its meaning is largely arbitrary. For example, the sound of “blue” likely bears no relationship to the properties of light we experience as blue, nor to the written form “blue”; the word sounds different across languages and has no sound at all in signed languages. Many languages, which make fewer, more, or different color distinctions, have no equivalent of “blue” at all. In language, the meaning of a signal cannot be predicted from the physical properties of the signal available to the senses; rather, the relationship is set by convention.

At the same time, language is a powerful engine of human intellect and creativity, allowing words to be recombined endlessly to generate an infinite number of new structures and ideas out of “old” elements. Language plays a central role in the human brain, from how we process color to how we make moral judgments. It directs how we allocate visual attention, construe and remember events, categorize objects, encode smells and musical tones, stay oriented, reason about time, perform mental mathematics, make financial decisions, experience and express emotions, and much more.

Indeed, a growing body of research is documenting how experience with language radically restructures the brain. People who were deprived of access to language as children (e.g., deaf individuals without access to users of a sign language) show patterns of neural connectivity that are radically different from those of people with early language exposure, and they differ cognitively from peers who had early language access. The later in life that first exposure to language occurs, the more pronounced and cemented the consequences. Further, speakers of different languages develop different cognitive skills and predispositions, shaped by the structures and patterns of their languages. Experience with languages in different modalities (e.g., spoken versus signed) also produces predictable differences in cognitive abilities outside the boundaries of language. For example, users of sign languages develop different visuospatial attention skills than those who use only spoken language. Exposure to written language also restructures the brain, even when literacy is acquired late in life. Even seemingly superficial properties, such as writing direction (left-to-right or right-to-left), have profound consequences for how people attend to, imagine, and organize information.

The normal human brain that is the subject of study in neuroscience is a “languaged” brain. It has come to be the way it is through a personal history of language use within an individual’s lifetime. It also actively and dynamically uses linguistic resources (the categories, constructions, and distinctions available in language) as it processes incoming information from across the senses.

Put simply, one cannot understand the human brain without understanding the contributions of language, both in the moment of thinking and as a formative force during earlier learning and experience. When we study language, we are getting a peek at the very essence of human nature. Languages—these deeply structured cultural objects that we inherit from prior generations—work alongside our biological inheritance to make human brains what they are.

Current brain-language models emerged in response to the classical Broca-Wernicke-Lichtheim-Geschwind lesion-deficit model of aphasia. In this model, language areas were localized in a left-lateralized manner, with damage to particular regions predicted to lead to specific patterns of language impairment. Thus, for example, the left posterior inferior frontal region, Broca’s area, was linked to speech production (where damage would result in articulatory problems); the left posterior temporal region, Wernicke’s area, to auditory speech recognition (where damage would impair language comprehension); and the arcuate fasciculus connecting these anterior and posterior regions to repetition (where damage would impair repetition but preserve comprehension).

This schematic view of brain-language mappings has given rise to clinical classifications of aphasic syndromes, which to this day continue to guide aphasia research and clinical practice in many circles. Seven major aphasic syndromes have been proposed, with varying behavioral patterns and lesion loci. Over time, however, serious clinical, biological, and psycholinguistic inadequacies of these mappings were identified. These include, for example, a failure to account for the wide range of lesion-deficit patterns observed in aphasia (e.g., when a lesion to a certain area does not result in the predicted behavioral profile, or when lesions to multiple regions produce behavioral patterns that would otherwise be predicted for a different area altogether) and an inability to explain changes in behavioral patterns observed in aphasia over time (e.g., when a person first diagnosed with Wernicke’s aphasia later presents, in the chronic stage, with conduction-like and/or anomic-like patterns). Such changes are reportedly experienced by 30%–60% of patients, with anomia being the most common end result of all aphasia-producing lesions.

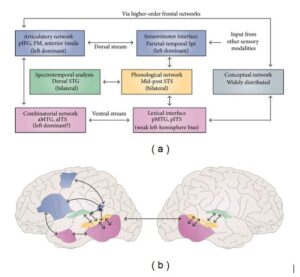

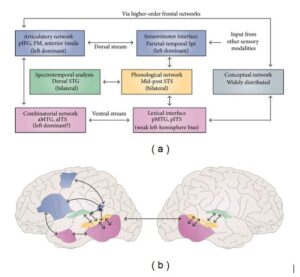

The dual-stream model of Hickok and Poeppel uses a dual-route neuroanatomical architecture of dorsal and ventral streams, borrowed from the field of visual processing and from studies of auditory processing in nonhuman primates, to explain how auditory language processing proceeds. The ventral stream, also known as the “what” stream, is implicated in the auditory recognition processes required for language comprehension, such as lexical semantic processing, and is mediated by neural networks projecting to different regions of the temporal lobe. The dorsal stream, termed the “where” stream, provides an interface between auditory and motor processing by mapping sound onto articulatory representations, subserved by projections from auditory cortical circuits to temporoparietal and frontal networks. This architecture is shown in Figure 1.

Fig. 1: Dorsal and Ventral Streams (adapted from Hickok and Poeppel)

Limitations of the classical model have been highlighted even further by the explosion of new findings from studies using advanced techniques for measuring real-time brain activity: for example, hemodynamic changes in the brain through functional magnetic resonance imaging (fMRI), intrinsic brain connectivity through resting-state fMRI, or the time course of brain activation during task performance via electroencephalography (EEG) or magnetoencephalography (MEG). With these techniques, many new inter- and intra-hemispheric language-related neural networks have been identified, extending well beyond the core language areas to include bilateral cortical networks as well as subcortical circuits.

Price, for example, in a review of the standard coordinates of peak activations reported in over 100 fMRI studies published in 2009, identified an intricate web of neural networks mediating different processes implicated in language comprehension and production. These included the following brain-language mappings: the superior temporal gyri bilaterally for prelexical acoustic analysis and phonemic categorization of auditory stimuli; middle and inferior temporal cortex for meaningful speech; left angular gyrus and pars orbitalis for semantic retrieval; superior temporal sulci bilaterally for sentence comprehension; and inferior frontal areas, posterior planum temporale, and ventral supramarginal gyrus for incomprehensible sentences (e.g., as a measure of plausibility). Speech production was found to activate additional neural networks, including left middle frontal cortex for word retrieval, independently of articulation; left anterior insula for articulatory planning; left putamen, presupplementary motor area, supplementary motor area, and motor cortex for overt speech initiation and execution; and anterior cingulate and bilateral head of caudate nuclei for response suppression during monitoring of speech output. Such data have created a clear need for new models of the neuroanatomy of language with greater neural and psycholinguistic specificity. Ideally, such models would spell out the specific links between the formal operations associated with particular language functions and the dynamic spatial and temporal neuronal pathways that mediate them.

CONCLUSION:

Results from neuroimaging, lesion studies, and studies of language in the aging brain provide compelling converging evidence for the concept of neural multifunctionality. This concept has both theoretical and practical (clinical) implications: theoretical with regard to models of brain-language relations, and practical with regard to the rehabilitation of persons with cognitive deficits resulting from brain damage.

The question remains, of course, of how a neurally multifunctional language system might work. Borrowing from recent developments in the memory literature, which emerged in part to account for apparent overlaps between the neural substrates mediating “what” and “how” memory functions, we propose adopting a component process framework for language processing.

Under such a framework, linguistic information would be processed through a neural system of component processes, in which region-specific neural configurations contribute to multiple cognitive tasks simultaneously. The interactions among components are conceived as “process-specific alliances.” These alliances are small sets of brain regions temporarily recruited to accomplish a cognitive task, given specific task demands. Each component in an alliance has a specific function, and together the components give rise to a complex operation. These small neural “groups” disintegrate once task demands are met and are thus distinct from larger-scale networks, whose connectivity continues to be observed at rest. The links among the components of the stable larger-scale networks can influence which alliances are formed, but they do not directly determine them. This approach is aligned with our view of the neural multifunctionality of language, whose operations rest on the interaction of “neural cohorts” subserving multiple functions in cognitive, emotional, motor, and perceptual domains.

The neural multifunctionality approach we propose here will allow the re-evaluation of current concepts of recovery from aphasia, focusing on the dynamic development of new neural support systems in the aphasic brain in service of new functions. We propose that this multifunctionality operates in a multidirectional and reciprocal fashion, such that neural networks engaged in language recovery mutually interact with neural supports of non-linguistic functions so as to give rise to new functional neuroanatomies (i.e., newly established or newly reinforced neural networks) in the neurologically compromised brain.


Prof. (Dr) Vivek Kumar Jha

Principal

Department of Audiology and Speech Language Pathology

Sumandeep Vidyapeeth (Deemed to be University)

Vadodara, Gujarat

Mob. No.: 9560276840

Email ID: jhavivek98@yahoo.com